Large language models (LLMs) have become advanced programs that can write many kinds of creative content, translate languages, produce human-quality text, and give meaningful answers to your questions. But to get the most out of these models, you need a solid framework that can connect them to other tools and data sources. That’s where LangChain comes in.

In this blog post, we’ll explore what LangChain is, its key features, and how you can use it to build cutting-edge AI applications.

What is LangChain?

LangChain is an open-source framework that simplifies building AI-powered applications by connecting large language models (LLMs) with external tools and data sources. Put simply, it helps developers create more advanced, interactive applications on top of LLMs such as OpenAI’s GPT-4.

At its core, LangChain acts as a bridge between LLMs and other data or systems.

Importance of LangChain in the AI development landscape

Imagine you’re working with an LLM that can generate human-like text. On its own, it’s impressive but limited in scope. With LangChain, you can connect that LLM to external databases, APIs, or even other AI models, building a smooth workflow that automates complex tasks. This is especially useful when you build apps around LangChain agents.

For example, if you need a chatbot that can pull real-time data from a custom database, LangChain facilitates that connection, allowing the LLM to retrieve up-to-date information.

LangChain is primarily designed for developers familiar with Python, JavaScript, or TypeScript. So, if you’re a software engineer or data scientist with experience in these languages, you can quickly start using LangChain to build AI-driven solutions.

LangChain Founders and background

LangChain was launched in 2022 as an open-source project by Harrison Chase and Ankush Gola. Because it simplifies integrating LLMs into real-world applications, it evolved rapidly and gained traction. Whether you want to create content, automate processes, or build chatbots, LangChain offers the foundation to do it.


Why is LangChain Important?

LangChain simplifies the process of developing AI-powered apps by streamlining how LLMs interact with large amounts of data. LLMs such as OpenAI’s GPT-3 or GPT-4 are pre-trained on a fixed set of data and can’t access anything beyond it unless you connect them to external data sources. LangChain bridges that gap, making it a key player in the future of LLM-powered applications.

For example, suppose you’re developing a chatbot that needs current information. On its own, a language model may not have the latest data, but with LangChain it can pull real-time information from sources such as Wikipedia, Google Search, or your own databases. This makes LangChain valuable for developers who want AI systems that stay up to date as new information arrives.

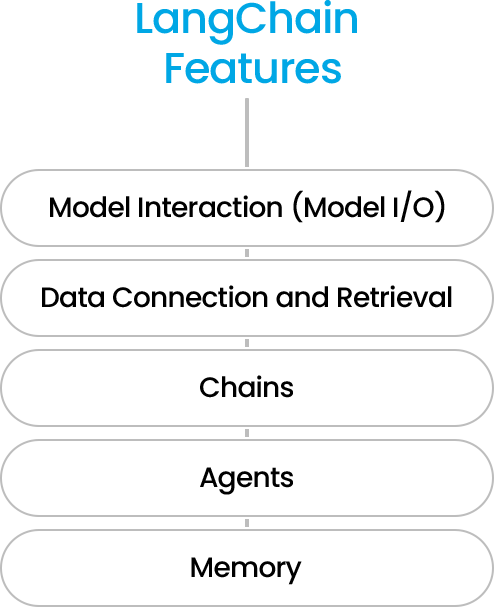

LangChain Features

LangChain features a variety of tools that make it highly versatile for developers. Here’s a breakdown of its core modules:

1. Model Interaction (Model I/O)

This module gives LangChain a standard interface for working with LLMs: formatting inputs, calling the model, and extracting outputs. Because the interface is the same across providers, you can switch between models from OpenAI, Hugging Face, and others with minimal code changes.

2. Data Connection and Retrieval

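LangChain’s data connection tools load documents from files, databases, and APIs, split them into manageable chunks, store them (often as embeddings in a vector store), and retrieve the pieces most relevant to a query so they can be passed to the LLM. This is crucial when you’re dealing with large datasets or need up-to-date information.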

3. Chains

Complex apps often need to link several LLM calls or other tools in sequence; such a sequence is called a chain. LangChain’s “Chains” module orchestrates this process, allowing you to create more advanced workflows by chaining models and components together.

4. Agents

LangChain agents take problem-solving a step further. Instead of following a fixed sequence, an agent uses the LLM to decide which action to take next, such as calling a search tool or a calculator, observes the result, and repeats until the task is done. This decision-making lets LangChain applications tackle more complex tasks.

5. Memory

The memory module allows LangChain to add short-term or long-term memory to your applications. This means your AI can remember previous interactions, creating more contextual and personalised user experiences.

LangChain Integrations

Integrations are one of LangChain’s strengths. Beyond connecting to LLMs, LangChain lets you link them to a range of cloud platforms, APIs, and other data sources.

Some of the most popular LangChain integrations include:

- LLM Providers: LangChain works with models from Hugging Face, Cohere, OpenAI, and more.

- Data Sources: You can use information from Apify Actors, Wikipedia, and Google Search to give your AI real-time access to accurate, current knowledge.

- Cloud Platforms: LangChain supports integrations with cloud services like AWS, Google Cloud, and Microsoft Azure.

- Vector Databases: By connecting to vector databases such as Pinecone, LangChain can store embeddings (high-dimensional vector representations) of text documents, images, and other data, and retrieve the items most similar to a query.

What is LangChain Expression Language (LCEL)?

LangChain Expression Language (LCEL) is a declarative way to compose chains: you describe which components connect to which, and LangChain handles how they run. It was designed to help developers take prototypes into production with minimal code changes. LCEL is built with streaming in mind, which helps minimise time-to-first-token so users see output sooner, and every LCEL chain supports both synchronous and asynchronous APIs.

With LCEL, you can quickly deploy complex workflows in production without sacrificing speed or flexibility.

LangChain Prompt Engineering

Writing effective prompts is crucial when working with LLMs, and LangChain’s prompt tools make this process easier. LangChain prompt engineering involves creating structured input templates that guide the LLM toward accurate, relevant, and creative responses.

What is LangChain Used For?

So, what is LangChain used for? The framework allows developers to build a wide variety of AI-powered applications. Whether you’re creating NLP apps, customer service chatbots, or data analysis tools, LangChain offers the flexibility to meet your needs.

Why Consider Using LangChain?

Despite their strengths, LLMs struggle with multi-step reasoning on their own. LangChain can help with that. It lets developers break complicated problems into smaller, more manageable steps, giving the model’s output more structure and rationale. This is particularly helpful for creating agents that can respond to complex questions.

For example, if you ask an LLM to identify the top-performing branches in your chain of stores, on its own it can only guess at an SQL query based on its training data, since it has never seen your database. With LangChain, the LLM can go further, calling predefined functions that query your real data and following logical steps to deliver a single, accurate answer.
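To make the “predefined functions” idea concrete, here is a sketch using only Python’s standard library. The `top_branches` function, the `sales` table, and its figures are hypothetical. The point is that the SQL is written and checked by a developer ahead of time, and the model only decides when to call the function and with what `limit`.

```python
import sqlite3


def top_branches(conn: sqlite3.Connection, limit: int = 3) -> list[tuple[str, float]]:
    """Return the best-performing branches by total revenue.

    The query is fixed and reviewed in advance, so the model never has to
    invent table or column names; it only supplies `limit`.
    """
    rows = conn.execute(
        """
        SELECT branch, SUM(revenue) AS total
        FROM sales
        GROUP BY branch
        ORDER BY total DESC
        LIMIT ?
        """,
        (limit,),
    )
    return rows.fetchall()


# Hypothetical sample data held in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (branch TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("Leeds", 120.0), ("York", 300.0), ("Leeds", 250.0), ("Hull", 90.0)],
)

print(top_branches(conn, limit=2))
```

In a LangChain app, you could expose a function like this to an agent with the `@tool` decorator from `langchain_core.tools`, typically through a small wrapper that supplies the database connection itself so the model only passes `limit`.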

How LangChain Works

LangChain works by connecting a series of components called “links” in a sequential workflow, known as a chain. Every link in the chain carries out a distinct function, such as processing the LLM’s output, obtaining data from a source, or preparing user input. By chaining together multiple steps, you can create more complex applications that are capable of sophisticated reasoning and dynamic responses.

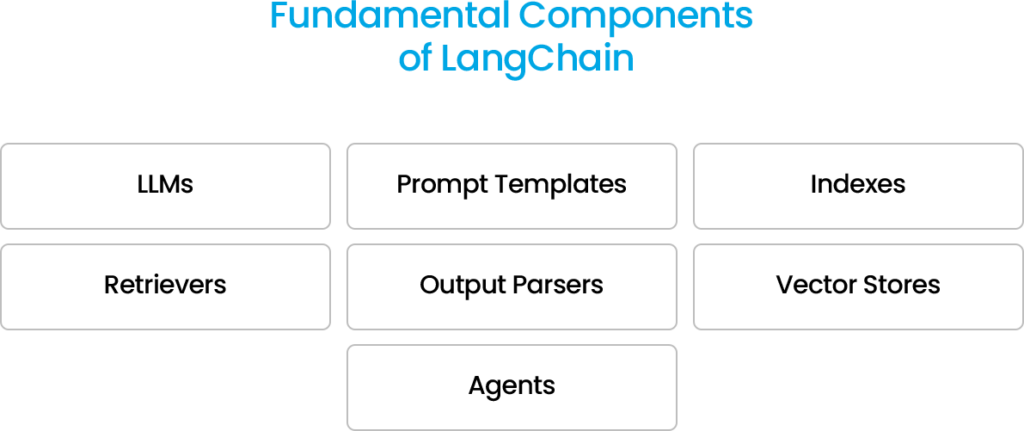

Fundamental Components of LangChain

- LLMs: Large language models like GPT-3 or GPT-4 that are pre-trained on vast datasets of text and code.

- Prompt Templates: These templates help format user inputs so the LLM can understand and respond accurately. For example, you might use a prompt template to include the user’s name and context in a chatbot.

- Indexes: Structures that organise your documents and their metadata so that relevant pieces can be looked up efficiently.

- Retrievers: Algorithms that locate relevant information within an index, improving the speed and accuracy of the LLM’s responses.

- Output Parsers: These tools format the LLM’s output, ensuring the responses are clear and actionable.

- Vector Stores: Used for storing high-dimensional data, vector stores enable applications to quickly retrieve information relevant to complex queries.

- Agents: Programs that can reason about a task, break it down into smaller subtasks, and decide which step to take next in order to reach the best result.


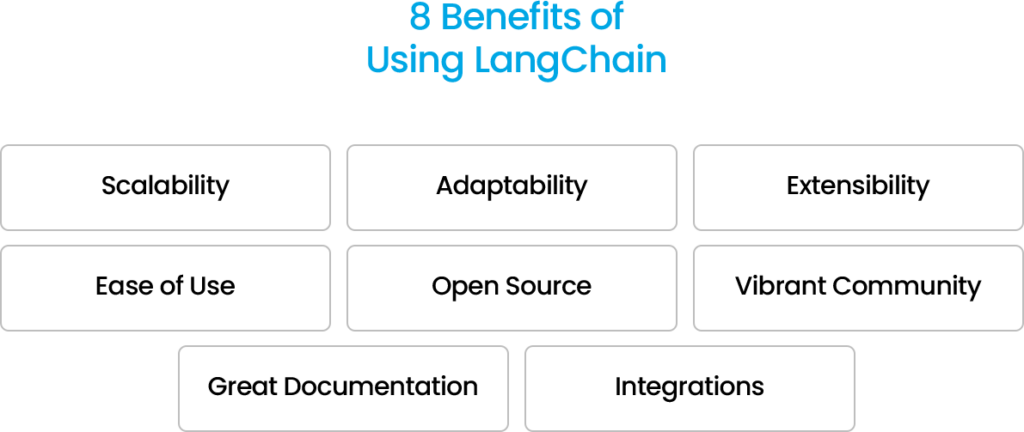

8 Benefits of Using LangChain

1. Scalability

LangChain helps you build applications that handle large data volumes effortlessly.

2. Adaptability

Whether you’re building chatbots or complex systems, LangChain is versatile enough to meet your needs.

3. Extensibility

You can extend LangChain with custom components, such as your own tools, retrievers, or output parsers, to add the features your application needs.

4. Ease of Use

With a high-level API, LangChain makes it easy to link LLMs with data sources and build complex workflows.

5. Open Source

LangChain is free to use and modify, making it accessible to developers around the world.

6. Vibrant Community

There’s a thriving community of LangChain users and developers ready to help.

7. Great Documentation

The comprehensive documentation makes it easy for developers to get started.

8. Integrations

Flask, TensorFlow, and other frameworks and libraries are easily integrated with LangChain.

How to Create Prompts in LangChain

Prompts are the backbone of any interaction with a language model such as GPT. They are the input that tells the model how to answer a question, and the better the prompt, the better the output tends to be. This is where prompt engineering comes in: the art and science of crafting effective prompts so the language model gives you accurate, relevant, and well-written responses.

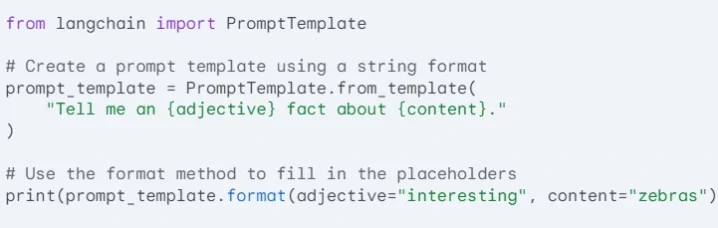

Creating prompts in LangChain is made easy by prompt templates, which serve as a set of instructions for the underlying language model (LLM). These templates vary in complexity, from simple questions to detailed instructions with examples designed to elicit high-quality responses.

If you’re working in Python, LangChain provides a ready-made prompt template class that’s simple to use. Here’s a step-by-step guide to getting started:

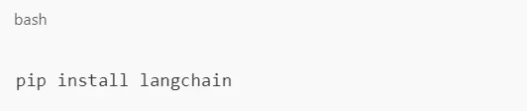

1. Install Python

First, make sure you have a recent version of Python installed. Once that’s done, open your terminal and run the following command to install the basic LangChain requirements:

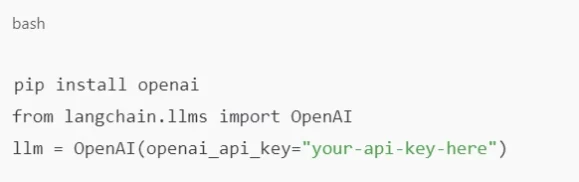

2. Add Integrations

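LangChain apps typically need at least one model integration, such as OpenAI. To use OpenAI’s API, sign up on the OpenAI website and retrieve your API key. Then install the OpenAI integration package (pip install langchain-openai, which also installs OpenAI’s own openai package) and make your API key available to your code.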
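Rather than hard-coding your key, a common approach is to read it from the `OPENAI_API_KEY` environment variable, which LangChain’s OpenAI integration checks. This minimal sketch uses only the standard library; `ensure_openai_key` is a hypothetical helper name.

```python
import getpass
import os


def ensure_openai_key() -> str:
    """Make sure OPENAI_API_KEY is set, prompting for it only if it is missing.

    Keeping the key in an environment variable means it never has to be
    written into your source files.
    """
    if not os.environ.get("OPENAI_API_KEY"):
        os.environ["OPENAI_API_KEY"] = getpass.getpass("Enter your OpenAI API key: ")
    return os.environ["OPENAI_API_KEY"]
```

Once the key is set, creating a model with `ChatOpenAI(model="gpt-4o-mini")` from the `langchain_openai` package should pick it up automatically; the model name is only an example.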

3. Import the Prompt Template

Once your environment is set up, import LangChain’s PromptTemplate class and define a template containing placeholders for the values you want to fill in.


In this example, the prompt template uses two input variables, adjective and content, to build a customised prompt. The result? Filled in with “interesting” and “zebras”, it asks the language model for an interesting fact about zebras.

How to Develop Applications in LangChain

- Define the Application: Start by identifying the use case for your app. This involves understanding the scope of the project, including any necessary integrations, components, and LLMs you’ll be working with.

- Build Functionality: Use prompts to define the logic and functionality of your app. This is where you can get creative with how the language model interacts with users.

- Customise: LangChain’s flexibility allows you to modify the code, creating custom functionality that meets your specific needs.

- Fine-tune LLMs: Select the appropriate LLM and, if needed, fine-tune it on data from your domain so it performs better for your application.

- Data Cleansing: Clean and accurate data is critical. Make sure you apply data cleansing techniques and implement security measures to protect sensitive information.

- Test Regularly: Testing your LangChain app throughout the development process helps make sure it functions properly and lives up to your expectations.

Examples and LangChain Use Cases

LangChain applications are versatile and can be applied across industries. Here are a few notable examples:

- Customer Service Chatbots: One of the most popular applications is creating chatbots capable of answering complex questions and handling transactions. These chatbots maintain context, ensuring seamless conversations, much like ChatGPT.

- Coding Assistants: Developers can use LangChain to create coding assistants that produce code snippets and offer debugging support, increasing productivity.

- Healthcare: In healthcare, LangChain-based tools can support doctors with diagnoses and automate administrative tasks like scheduling, allowing professionals to focus on patient care.

- Marketing and E-Commerce: Businesses use LangChain for personalised marketing, such as generating product recommendations and crafting product descriptions that resonate with customers.

LangChain OpenAI Integration

LangChain OpenAI integration is one of the most powerful combinations in AI development. By connecting OpenAI models like GPT-4 to LangChain, developers can build intelligent systems that leverage real-time data for more interactive experiences.

How to Get Started with LangChain

You will need to know the fundamentals of Python programming to begin using LangChain. Once the framework is installed, you can start building LLM-powered applications right away. LangChain’s open-source code is available on GitHub, making it easy to get started with development. Here’s how you can begin:

1. Install LangChain

You can install LangChain with a simple pip command:

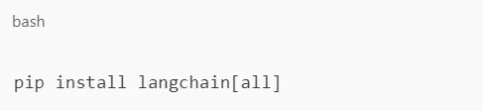

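Older LangChain releases offered a langchain[all] extra that installed every integration’s requirements at once. In current releases, integrations ship as separate packages, such as langchain-openai, so you install only the ones your project needs.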

2. Create a Project

After installing LangChain, create a new project directory for your application and initialise it, for example by setting up a virtual environment inside it.

3. Build a Chain

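A chain is a sequence of linked components in LangChain, where each component’s output becomes the next one’s input. In current versions, you typically build a chain with LangChain Expression Language (LCEL) by joining components such as a prompt template, a model, and an output parser with the | operator.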

4. Execute the Chain

Call the invoke() method on the chain to execute it; the chain returns its final output directly. Older LangChain releases used a run() method for this, which has since been deprecated.

What Kind of Apps Can You Create Using LangChain?

LangChain supports a variety of applications:

- Content Generation and Summarisation: Use LangChain to create tools that generate summaries of articles or generate new content from scratch.

- Chatbots: Build chatbots that can interact with users, provide customer support, or even create unique text like poetry, screenplays, or code.

- Data Analysis Tools: LangChain can also be used to develop data analysis applications, helping users identify trends and insights from large datasets.

Is LangChain Open-Source?

Yes! LangChain is open-source, meaning you can access the code on GitHub and build your own apps for free. LangChain itself doesn’t ship models, but its integrations give you quick access to pre-trained models from providers such as OpenAI and Hugging Face.

Wrap-Up: The Future of LangChain

Right now, one of the primary LangChain use cases is building chat-based apps on top of large language models like ChatGPT. In a recent interview, LangChain’s CEO, Harrison Chase, mentioned that “chat over your documents” is currently the ideal use case.

However, Chase hinted that there could be more exciting interfaces in the future, beyond chat. Developers will have even more resources to create creative applications as LangChain develops, especially for data science challenges.

So, if you haven’t tried LangChain yet, now’s the time to jump in and see what it can do for you!